Fine-tune your ML experiments with Comet Optimizer

Comet Optimizer is the component of the Comet platform that automates hyperparameter optimization for machine learning models, using the hyperparameter ranges and search algorithm that you choose.

Comet Optimizer supports grid search, random search, and Bayesian optimization. You can learn more about each approach in our Hyperparameter Optimization With Comet blog post.

Comet Optimizer streamlines the search for the best set of hyperparameters for your ML experiments. It offers a unified, framework-agnostic interface for all your workflows and integrates with the experiment management capabilities of Comet Experiment Management.

Get started: Hyperparameter Tuning

Hyperparameter tuning with Comet Optimizer is a two-step process: you configure an Optimizer object, then you run it.

Comet Optimizer is accessible from both the Python SDK and the CLI. The step-by-step instructions below use the Python SDK, but you can refer to the end-to-end examples to explore both options.

Prerequisites: Choose your tuning strategy

Before you begin optimization, you need to choose:

- The hyperparameters to tune.

- The search space for each hyperparameter to tune.

- The search algorithm (grid search, random search, or Bayesian optimization).

Additionally, make sure to refactor your existing model training code so that hyperparameters are defined as parametrized variables.
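
As an illustration of this refactoring, the sketch below uses a toy training routine (not a real model) whose hyperparameters arrive as function arguments rather than hard-coded constants. The function name `train_model` and the toy objective are assumptions made for this example only.

```python
import random


def train_model(learning_rate: float, batch_size: int, epochs: int = 3, seed: int = 0) -> float:
    """Toy training routine: hyperparameters are arguments, not module-level constants."""
    rng = random.Random(seed)
    data = [rng.uniform(-1, 1) for _ in range(64)]
    target_weight = 2.0  # the value the single weight should converge to
    weight = 0.0

    for _ in range(epochs):
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradient of the mean squared error between weight * x and target_weight * x
            grad = sum(2 * (weight - target_weight) * x * x for x in batch) / len(batch)
            weight -= learning_rate * grad

    # Final loss over the whole toy data set
    return sum(((weight - target_weight) * x) ** 2 for x in data) / len(data)
```

Because `learning_rate` and `batch_size` are parameters, a tuning loop can call `train_model` with whatever values the search algorithm proposes.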

1. Define the Optimizer configuration

Optimizer accesses your hyperparameter search options from a config dictionary that you define.

Comet uses the config dictionary to dynamically find the best set of hyperparameter values that will minimize or maximize a particular metric of your choice.

You can define the config dictionary in code or in a JSON config file. For example, you could define your configuration dictionary in code as:
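
A minimal sketch of such a dictionary might look like the following. It assumes a Bayesian search that minimizes a metric named `loss` over two hyperparameters, `learning_rate` and `batch_size`. The specific ranges, the value of `name`, and the exact sub-keys inside `spec` and `parameters` are illustrative assumptions; check them against the Configure the Optimizer page before relying on them.

```python
# Illustrative Optimizer configuration (values are assumptions for this sketch)
optimizer_config = {
    # Search algorithm: grid search, random search, or Bayesian optimization
    "algorithm": "bayes",
    # What to optimize: the metric to track and the direction of improvement
    "spec": {
        "objective": "minimize",
        "metric": "loss",
    },
    # Search space for each hyperparameter to tune
    "parameters": {
        "learning_rate": {"type": "float", "min": 0.0001, "max": 0.1},
        "batch_size": {"type": "discrete", "values": [16, 32, 64]},
    },
    # Optional keys
    "name": "learning-rate-batch-size-search",  # hypothetical name
    "trials": 1,
}
```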

On top of the three mandatory configuration keys (algorithm, spec, and parameters), the Optimizer configuration dictionary also supports the optional keys name and trials. You can find a full description of each configuration key on the Configure the Optimizer page.

2. Run optimization

The Optimizer configuration defined in the previous step is used to initialize the Optimizer object, e.g. by running:
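
As a sketch of this initialization step, the helper below wraps the call to `comet_ml.Optimizer`. The wrapper name `create_optimizer` is hypothetical, and the sketch assumes that the Comet SDK is installed and that your Comet credentials are already configured (for example through an environment variable).

```python
def create_optimizer(config):
    """Build a Comet Optimizer from a configuration dictionary."""
    # Imported inside the function so that this sketch can be loaded
    # without the SDK; calling it requires comet_ml and valid credentials.
    import comet_ml

    return comet_ml.Optimizer(config=config)
```

You would then call `opt = create_optimizer(optimizer_config)` with the dictionary defined in the previous step.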

Under the hood, the Optimizer object creates one Experiment object per tuning run, i.e. for each automated hyperparameter selection.

You can then perform your tuning trials by iterating through the tuning runs with opt.get_experiments(). Within the scope of each tuning experiment, you can train and evaluate your models as you normally would, passing in the parameters selected by Optimizer, which you retrieve with the experiment.get_parameter() method. For example, the pseudocode below showcases how to run tuning experiments against the learning_rate and batch_size parameters:
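
The loop described above can be sketched as follows. The function name `run_tuning` and the `train_fn` callback are assumptions for this example: `train_fn` stands in for your own training and evaluation code and is expected to return the value of the metric being optimized.

```python
def run_tuning(opt, train_fn, metric_name="loss"):
    """Run one training job per tuning experiment and log the resulting metric."""
    results = []
    # Each iteration yields an Experiment with its own hyperparameter selection
    for experiment in opt.get_experiments():
        # Read the values that Optimizer selected for this tuning run
        learning_rate = experiment.get_parameter("learning_rate")
        batch_size = experiment.get_parameter("batch_size")

        # Train and evaluate as usual, using the selected values
        metric_value = train_fn(learning_rate=learning_rate, batch_size=batch_size)

        # Report the optimization metric, then close the experiment
        experiment.log_metric(metric_name, metric_value)
        experiment.end()
        results.append((learning_rate, batch_size, metric_value))
    return results
```

The metric name passed to `log_metric` should match the metric named in the Optimizer configuration, since that is the value the search algorithm tries to minimize or maximize.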

Comet Optimizer logs your optimizer configuration in the Other tab of the Single Experiment page with the prefix "optimizer_".

We recommend also saving the optimizer parameters with Experiment.log_parameters() (or Experiment.log_parameter()) and the optimizer metrics with Experiment.log_metrics() (or Experiment.log_metric()), so that they also appear in the Hyperparameters tab and the Metrics tab, respectively, of the Single Experiment page.
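
One way to follow this recommendation is sketched below. The helper name `log_trial` and its arguments are hypothetical; the sketch only relies on the `get_parameter()`, `log_parameters()`, and `log_metrics()` methods mentioned above.

```python
def log_trial(experiment, parameter_names, metrics):
    """Log the selected hyperparameters and the resulting metrics on an experiment."""
    # Collect the values that Optimizer selected for this tuning run
    params = {name: experiment.get_parameter(name) for name in parameter_names}
    # Make them visible in the Hyperparameters tab
    experiment.log_parameters(params)
    # Make the results visible in the Metrics tab
    experiment.log_metrics(metrics)
    return params
```

Inside a tuning loop, a call such as `log_trial(experiment, ["learning_rate", "batch_size"], {"loss": loss})` would record both the inputs and the outcome of the run.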

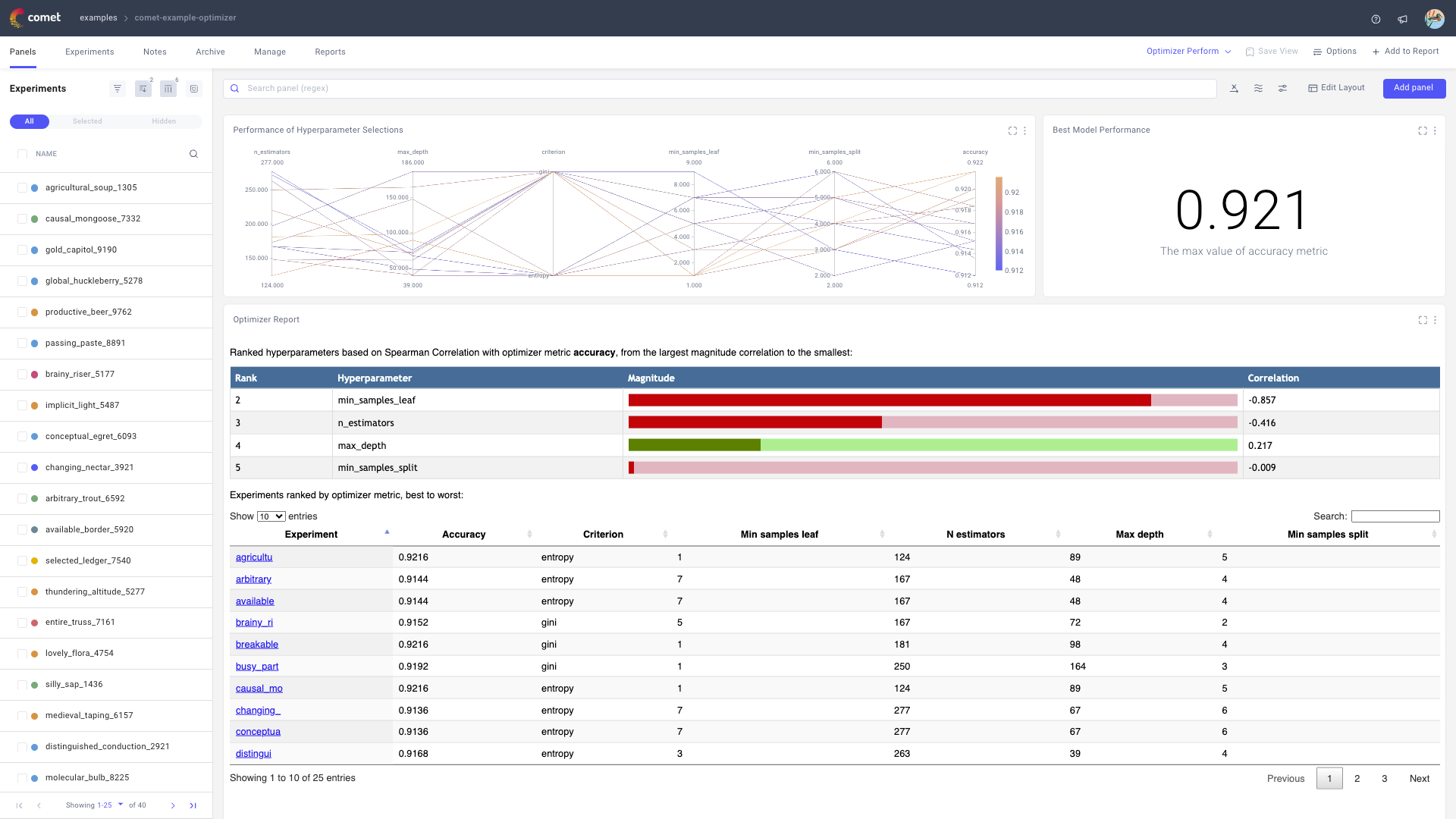

You can then navigate the results of a Comet Optimizer run as you would with any Comet ML Project containing multiple experiments. Learn more in the Analyze hyperparameter tuning results page.

Tip

Make your optimization runs more efficient through parallel execution!

Learn how on the Run hyperparameter tuning in parallel page.

End-to-end examples

Below, we provide an end-to-end example on how to run optimization with the Comet SDK, and references on how to run optimization from the Comet CLI.
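
The sketch below shows what an end-to-end run with the SDK could look like, under several assumptions: a random search over two hyperparameters of a scikit-learn random forest trained on the wine data set, a hypothetical project name `optimizer-demo`, and Comet credentials already configured. The data set, model, ranges, and the `maxCombo` limit are choices made for this illustration.

```python
# Illustrative configuration: random search that maximizes test accuracy
E2E_CONFIG = {
    "algorithm": "random",
    "spec": {
        "objective": "maximize",
        "metric": "accuracy",
        "maxCombo": 10,  # assumed cap on the number of combinations to try
    },
    "parameters": {
        "n_estimators": {"type": "integer", "min": 10, "max": 200},
        "max_depth": {"type": "integer", "min": 2, "max": 12},
    },
    "trials": 1,
}


def evaluate_model(n_estimators, max_depth, seed=42):
    """Train a random forest with the given hyperparameters and return test accuracy."""
    from sklearn.datasets import load_wine
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.model_selection import train_test_split

    X, y = load_wine(return_X_y=True)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, random_state=seed
    )
    model = RandomForestClassifier(
        n_estimators=n_estimators, max_depth=max_depth, random_state=seed
    )
    model.fit(X_train, y_train)
    return model.score(X_test, y_test)


def main():
    """Run the hyperparameter search; requires comet_ml and valid credentials."""
    import comet_ml

    opt = comet_ml.Optimizer(config=E2E_CONFIG)
    for experiment in opt.get_experiments(project_name="optimizer-demo"):
        # Cast to int in case a selected value is returned as a float
        params = {
            name: int(experiment.get_parameter(name))
            for name in E2E_CONFIG["parameters"]
        }
        accuracy = evaluate_model(**params)
        experiment.log_parameters(params)
        experiment.log_metric("accuracy", accuracy)
        experiment.end()
```

Calling `main()` starts the search. Each tuning run then appears as a separate experiment in the project.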

comet, the command-line utility installed with the Comet SDK, provides an optimize command for running hyperparameter optimization from the command line:

$ comet optimize [options] [PYTHON_SCRIPT] OPTIMIZER

where OPTIMIZER is defined from a dictionary, a JSON file, or an Optimizer ID.
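
To prepare a JSON file that can serve as the OPTIMIZER argument, you could serialize a configuration dictionary as sketched below. The helper name `write_optimizer_config` and the file names `optimizer.json` and `train.py` are hypothetical.

```python
import json
from pathlib import Path


def write_optimizer_config(config, path):
    """Write an Optimizer configuration dictionary to a JSON file."""
    path = Path(path)
    path.write_text(json.dumps(config, indent=2))
    return path


# Following the synopsis above, the resulting file could then be passed as:
#   $ comet optimize train.py optimizer.json
```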

For more information, please refer to comet optimize and the end-to-end example for parallel execution.

That's it! Once you launch the optimization process, Comet Optimizer iteratively explores the hyperparameter space and automatically logs the selected hyperparameters and the optimization metric for each tuning experiment.

For this example, parameters and metrics are logged in the Other tab of the Single Experiment page and also in the Hyperparameters tab and Metrics tab, respectively, thanks to Comet's built-in scikit-learn integration.

Try it now!

We have created two beginner demo projects for you to explore as you get started with Comet Optimizer.

Click on the Colab Notebook below to review the code for an example hyperparameter tuning run of a Keras model, managed with Comet Optimizer.

Additionally, you can explore the example Project created from running the end-to-end example in the Comet UI by clicking on the button below!