Log prompts and chains

CometLLM allows you to log prompts and chains for any third-party or custom LLM or agent.

This guide provides step-by-step instructions for tracking your prompt engineering workflows with CometLLM.

Tip

OpenAI and LangChain have direct integrations with CometLLM, so you do not need to explicitly log each prompt or chain when using them.

Find instructions in the Integrate CometLLM with OpenAI and the Integrate CometLLM with LangChain pages, respectively.

What are prompts, chains, and spans?

CometLLM defines three different objects that you can use to track your prompt engineering trials:

- Prompt: A specific input or query provided to a language model to generate a response or prediction.

- Span: An individual context or segment of input data within a chain of prompts. Note that spans can be nested.

- Chain: A sequence of spans, often used to guide the language model's generation process.

Consider using a prompt when tracking a single request to an LLM, and opt for a chain when tracking a conversation or a multi-step request. Spans within a chain let you structure complex chains into distinct parts.

Note

You can access any logged prompt or chain via the comet_llm.API submodule by retrieving its Trace. You can learn more about using traces on the Search & Export LLM prompts and chains page.

How to track your LLM prompt engineering

Tracking your LLM prompt engineering workflows is as simple as logging the inputs, outputs, and other relevant metadata for your LLM interactions.

Pre-requisites

Make sure you have set up the Comet LLM SDK correctly, i.e. by completing these two steps:

These steps are completed correctly if you can successfully initialize the SDK with your API key and project and workspace details.
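As a rough sketch of that check, the snippet below calls `comet_llm.init()` with explicit credentials read from environment variables, assuming `comet_llm.init()` accepts `api_key`, `workspace`, and `project` keyword arguments. The helper name `init_comet_llm`, the environment variable names, and the injectable `init` parameter (which lets the function run without an API key, for example in a test) are assumptions of this sketch, not part of the SDK.

```python
import os
from typing import Callable, Mapping, Optional


def init_comet_llm(
    env: Mapping[str, str] = os.environ,
    init: Optional[Callable[..., None]] = None,
) -> dict:
    """Initialize the Comet LLM SDK with credentials taken from the environment.

    The environment variable names below are this sketch's own choice.
    """
    kwargs = {
        "api_key": env.get("COMET_API_KEY"),
        "workspace": env.get("COMET_WORKSPACE"),
        "project": env.get("COMET_PROJECT_NAME"),
    }
    # Drop unset values so comet_llm.init() uses its defaults for them.
    kwargs = {key: value for key, value in kwargs.items() if value}
    if init is None:
        import comet_llm  # requires `pip install comet-llm`

        init = comet_llm.init
    init(**kwargs)
    return kwargs
```

If this runs without raising an error, the SDK is set up and the examples in this guide should be able to log to your project.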

1. Log your prompt engineering workflow

CometLLM supports both prompts and chains for your prompt engineering workflows.

Log a prompt to an LLM Project

The comet_llm.log_prompt() method allows you to log a single prompt to a Comet LLM project.

In its simplest form, you only need to log the `prompt` (i.e., the user request) and the `output` (i.e., the model response), as showcased in the mock example below.
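A minimal sketch of such a mock call, using the same `prompt` and `output` keyword arguments as the end-to-end prompt example at the end of this guide. The optional `log_prompt` parameter is a hypothetical hook added so the function can be exercised without an API key; by default the function uses `comet_llm.log_prompt`.

```python
from typing import Any, Callable, Optional


def log_mock_prompt(log_prompt: Optional[Callable[..., Any]] = None) -> Any:
    """Log a single mock request/response pair to the configured LLM project."""
    if log_prompt is None:
        import comet_llm  # requires `pip install comet-llm` and a configured API key

        comet_llm.init()
        log_prompt = comet_llm.log_prompt
    # Only `prompt` (the user request) and `output` (the model response) are required.
    return log_prompt(
        prompt="What is your name?",
        output="My name is Alex.",  # mock response; replace with a real LLM call
    )
```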

1 2 3 4 5 6 7 8 9 10 | |

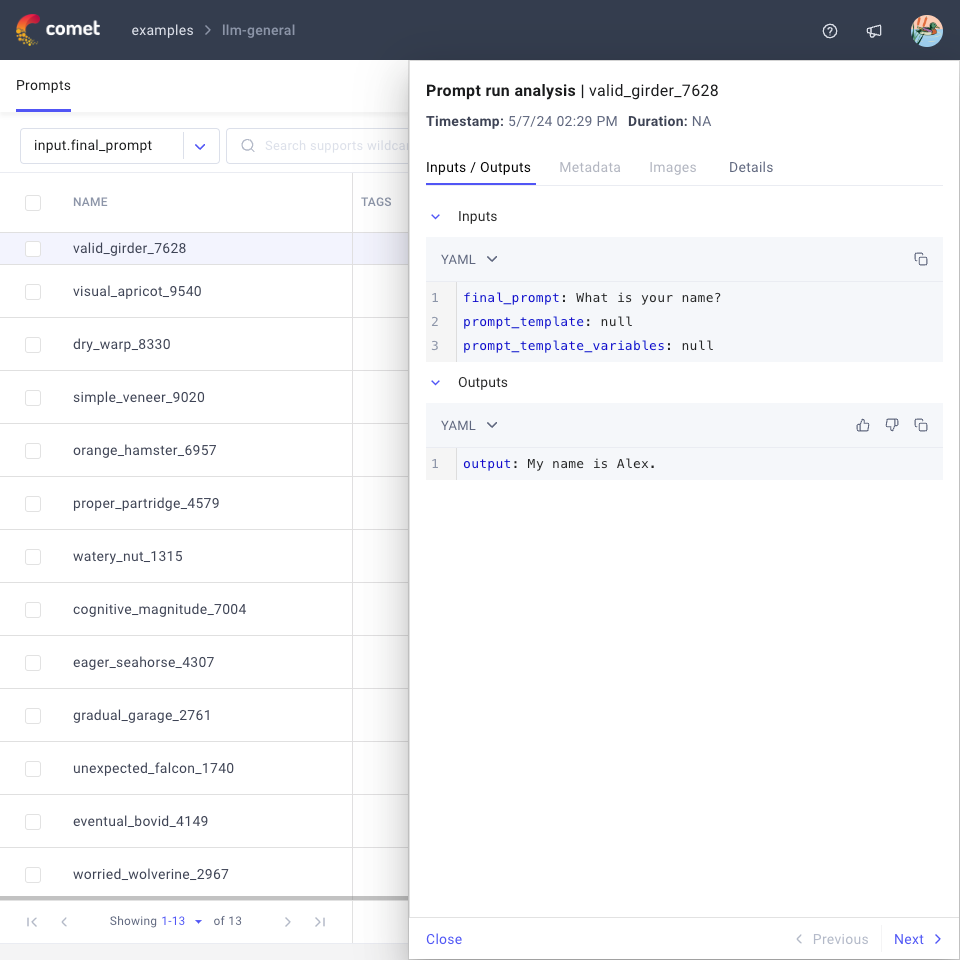

You can then access the logged prompt from the Comet UI as per the screenshot below.

Log a chain to an LLM Project

The Comet LLM SDK supports logging a chain of executions that may include more than one LLM call, context retrieval, data pre-processing, and/or data post-processing.

Logging a chain is a three-step process:

First, start a chain with the `comet_llm.start_chain()` method:

```python
import comet_llm

# Initialize the Comet LLM SDK
comet_llm.init()

# Start the chain
user_question = "What is your name?"
comet_llm.start_chain(inputs={"user_question": user_question})
```

`inputs` is the only required argument, and it defines all the inputs belonging to the chain as key-value pairs.

Then, you can define each step in the chain as part of one or more `Span` objects. Each span keeps track of the inputs, outputs, and duration of the step. You can have as many spans as needed, and they can be nested within each other.

In its simplest form, a chain is composed of one span containing a single model call, as showcased in the example below.

```python
# Add the first span to the chain
with comet_llm.Span(
    category="intro",  # Any custom category name
    inputs={"user": user_question},  # A JSON-serializable object
) as span:
    model_response = "My name is Alex"
    span.set_outputs(outputs={"response": model_response})  # A dict of JSON-serializable values
```

`inputs` and `category` are the only mandatory arguments of `comet_llm.Span()`.

Tip

Select a `category` that meaningfully conveys the logic of the `Span`. You may also consider standardizing `Span` categories across LLM projects.

Finally, end your chain with `comet_llm.end_chain()`:

```python
# End the chain
comet_llm.end_chain(outputs={"result": model_response})
```

`outputs` is the only required argument, and it defines all the outputs belonging to the chain as key-value pairs.

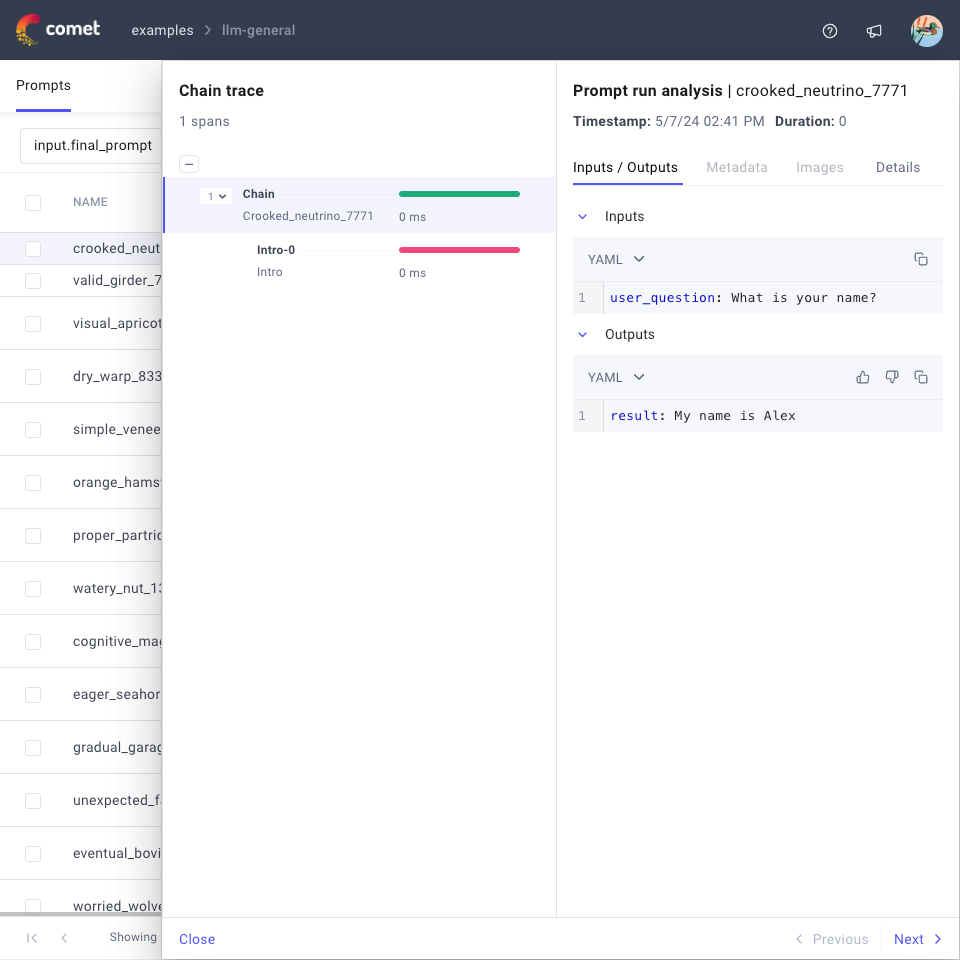

CometLLM uploads the chain to the LLM project for tracking and management only after ending the chain. You can then access the logged chain from the Comet UI as per the screenshot below.
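Because spans can be nested, a multi-step chain can group related steps under a parent span. The sketch below, an illustration rather than an official example, nests a mock retrieval span and a mock LLM-call span inside one parent span by nesting `with comet_llm.Span(...)` blocks, as the end-to-end chain example at the end of this guide also does. The span categories and mock values are hypothetical, and the `llm` parameter is a hypothetical hook for passing a stand-in object in tests; by default the function imports `comet_llm`.

```python
from typing import Any, Optional


def run_nested_chain(llm: Optional[Any] = None) -> str:
    """Log a chain whose retrieval and generation steps sit under one parent span."""
    if llm is None:
        import comet_llm  # requires `pip install comet-llm` and a configured API key

        comet_llm.init()
        llm = comet_llm
    user_question = "What is your name?"
    llm.start_chain(inputs={"user_question": user_question})

    with llm.Span(category="answer-question", inputs={"user_question": user_question}) as parent:
        # Child span 1: a mock context-retrieval step.
        with llm.Span(category="context-retrieval", inputs={"query": user_question}) as retrieval:
            context = "The assistant is called Alex."
            retrieval.set_outputs(outputs={"context": context})
        # Child span 2: a mock LLM call that uses the retrieved context.
        with llm.Span(
            category="llm-call",
            inputs={"question": user_question, "context": context},
        ) as generation:
            answer = "My name is Alex."  # replace with a real LLM call
            generation.set_outputs(outputs={"answer": answer})
        parent.set_outputs(outputs={"answer": answer})

    # The chain is uploaded to the LLM project only once it is ended.
    llm.end_chain(outputs={"result": answer})
    return answer
```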

2. Add metadata for your prompts and chains

All logging methods of the CometLLM SDK provide you with the ability to add metadata for your prompt engineering workflow by using:

- The `metadata` argument to provide custom key-value pairs.
- Built-in arguments of the SDK methods, such as `prompt_template` for the `log_prompt()` method.

Use the comet_llm module to log metadata when you first run your prompt engineering workflow, and the comet_llm.API submodule to update metadata for existing prompts and chains (i.e., traces).

Below we explore these options in detail and with examples.

Tip

Metadata is especially useful when comparing the performance of multiple prompts and/or chains in the Comet UI, for example when grouping them by a metadata field!

Log custom metadata via the metadata argument

CometLLM allows you to add any custom metadata to prompts, chains, and spans.

The SDK methods that support the `metadata` argument are:

- For prompts: `log_prompt()`.
- For chains: `start_chain()` and `end_chain()`.
- For spans: the `Span()` constructor and `set_outputs()`.
- For traces: `API.log_metadata()`.

Warning

If you log the same metadata keys when starting, ending, or updating a chain (or when initializing and setting outputs for a span), Comet only retains the last value provided for that key.

Below we provide examples for each of the two ways to add custom metadata in your prompt engineering workflows: when you first log your prompt engineering workflow, or to update an existing prompt or chain.
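The sketch below illustrates both routes under a few assumptions. `log_chain_with_metadata()` passes `metadata` to `start_chain()`, the `Span()` constructor, `set_outputs()`, and `end_chain()`, as listed above. `update_trace_metadata()` assumes that `comet_llm.API()` exposes `get_llm_trace_by_key()` and that the returned trace object has a `log_metadata()` method; check the API reference of your installed `comet_llm` version before relying on these names. The trace key, the metadata values, and the `llm`/`api` test hooks are hypothetical.

```python
from typing import Any, Dict, Optional


def log_chain_with_metadata(llm: Optional[Any] = None) -> None:
    """Attach custom metadata to a chain and its span when they are first logged."""
    if llm is None:
        import comet_llm  # requires `pip install comet-llm` and a configured API key

        comet_llm.init()
        llm = comet_llm
    question = "What is your name?"
    # Chain-level metadata can be passed when starting and when ending the chain.
    llm.start_chain(inputs={"user_question": question}, metadata={"app_version": "1.0"})
    # Span-level metadata can be passed when creating the span and when setting its outputs.
    with llm.Span(
        category="llm-call",
        inputs={"user_question": question},
        metadata={"model": "mock-model"},
    ) as span:
        answer = "My name is Alex."  # replace with a real LLM call
        span.set_outputs(outputs={"answer": answer}, metadata={"total_tokens": 12})
    llm.end_chain(outputs={"result": answer}, metadata={"status": "success"})


def update_trace_metadata(
    trace_key: str, metadata: Dict[str, Any], api: Optional[Any] = None
) -> None:
    """Add or overwrite metadata on a prompt or chain that has already been logged."""
    if api is None:
        import comet_llm

        api = comet_llm.API()
    # Assumption: get_llm_trace_by_key() returns a trace object exposing log_metadata().
    trace = api.get_llm_trace_by_key(trace_key)
    trace.log_metadata(metadata)
```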

1 2 3 4 5 6 7 8 9 10 11 12 13 14 | |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 | |

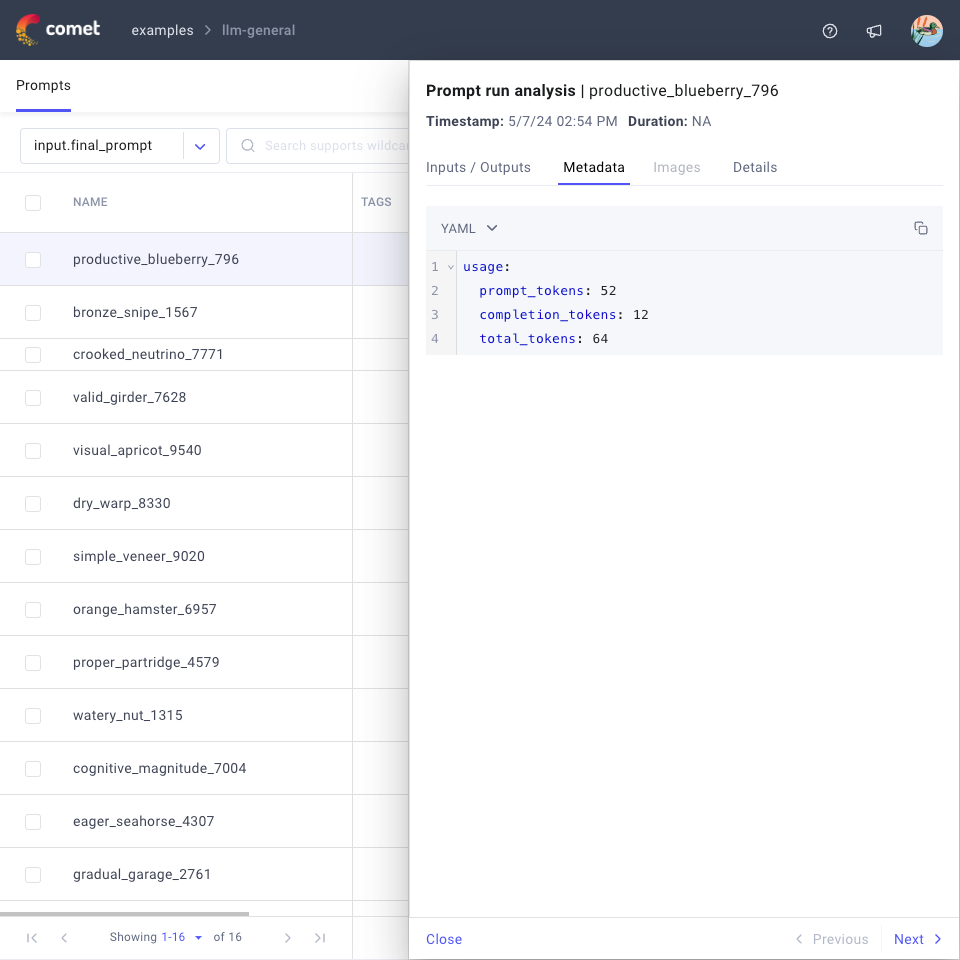

Your custom metadata is logged in the Metadata tab of the Prompt sidebar as showcased in the example screenshot below.

Tip

While spans and chains automatically differentiate between input and output metadata depending on when you save the metadata, you can mimic similar behavior for prompts by adding an "input_" or "output_" prefix to the metadata keys.
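For example, a small helper (hypothetical, not part of the SDK) can fold input-side and output-side metadata into a single dictionary with prefixed keys:

```python
from typing import Any, Dict, Mapping


def io_prefixed_metadata(
    input_metadata: Mapping[str, Any], output_metadata: Mapping[str, Any]
) -> Dict[str, Any]:
    """Merge input- and output-side metadata into one dict with "input_"/"output_" key prefixes."""
    metadata = {f"input_{key}": value for key, value in input_metadata.items()}
    metadata.update({f"output_{key}": value for key, value in output_metadata.items()})
    return metadata


metadata = io_prefixed_metadata(
    {"temperature": 0.2, "model": "mock-model"},
    {"finish_reason": "stop", "total_tokens": 12},
)
print(metadata)
# {'input_temperature': 0.2, 'input_model': 'mock-model', 'output_finish_reason': 'stop', 'output_total_tokens': 12}
```

The resulting dictionary can then be passed as the `metadata` argument of `comet_llm.log_prompt()`.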

Use built-in arguments to log details and I/O attributes

Many methods of the Comet LLM SDK provide built-in arguments that specify relevant details or input / output attributes, as summarized in the table below.

| LOG TYPE | SDK METHOD | BUILT-IN METADATA |
|---|---|---|
| prompt | `log_prompt()` | `tags`, `prompt_template`, `prompt_template_variables`, `timestamp`, `duration` |
| chain | `start_chain()` | `tags` |
| span | `Span()` constructor | `category`, `name` |
| trace | `API.log_user_feedback()` | `score` |

Note that:

- `tags` are a custom list of user-defined labels to attach to the prompt or chain.
- `score` is a user feedback score, defined as a binary `0` or `1` value, that you can add to any logged prompt or chain (i.e., a trace). It is useful for keeping track of the quality of each prompting trial.

Tip

If you need a more granular user feedback score, you can log the score as a custom metadata attribute instead.

Below we provide examples for each of the two ways to add these details in your prompt engineering workflows: when you first log your prompt engineering workflow, or when updating an existing prompt or chain (with user feedback).
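The sketch below shows both routes under stated assumptions. `log_prompt_with_details()` passes the built-in `log_prompt()` arguments from the table, assuming `timestamp` accepts a Unix time in seconds (as returned by `time.time()`); `duration` is computed the same way as in the end-to-end example at the end of this guide. `log_feedback()` assumes that `comet_llm.API().get_llm_trace_by_key()` returns a trace object with a `log_user_feedback()` method. The tags, template, trace key, and `llm`/`api` test hooks are hypothetical.

```python
import time
from typing import Any, Optional

PROMPT_TEMPLATE = "Answer the question.\n\nQuestion: {question}\nAnswer:"


def log_prompt_with_details(question: str, llm: Optional[Any] = None) -> None:
    """Log a prompt together with its built-in details and I/O attributes."""
    if llm is None:
        import comet_llm  # requires `pip install comet-llm` and a configured API key

        comet_llm.init()
        llm = comet_llm
    start = time.time()
    answer = "My name is Alex."  # replace with a real LLM call
    duration = time.time() - start
    llm.log_prompt(
        prompt=PROMPT_TEMPLATE.format(question=question),
        output=answer,
        tags=["mock", "faq"],
        prompt_template=PROMPT_TEMPLATE,
        prompt_template_variables={"question": question},
        timestamp=start,  # assumption: Unix time in seconds
        duration=duration,
    )


def log_feedback(trace_key: str, score: int, api: Optional[Any] = None) -> None:
    """Attach a binary user feedback score to an already-logged prompt or chain."""
    if score not in (0, 1):
        raise ValueError("score must be 0 or 1")
    if api is None:
        import comet_llm

        api = comet_llm.API()
    # Assumption: get_llm_trace_by_key() returns a trace object exposing log_user_feedback().
    api.get_llm_trace_by_key(trace_key).log_user_feedback(score)
```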

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 | |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 | |

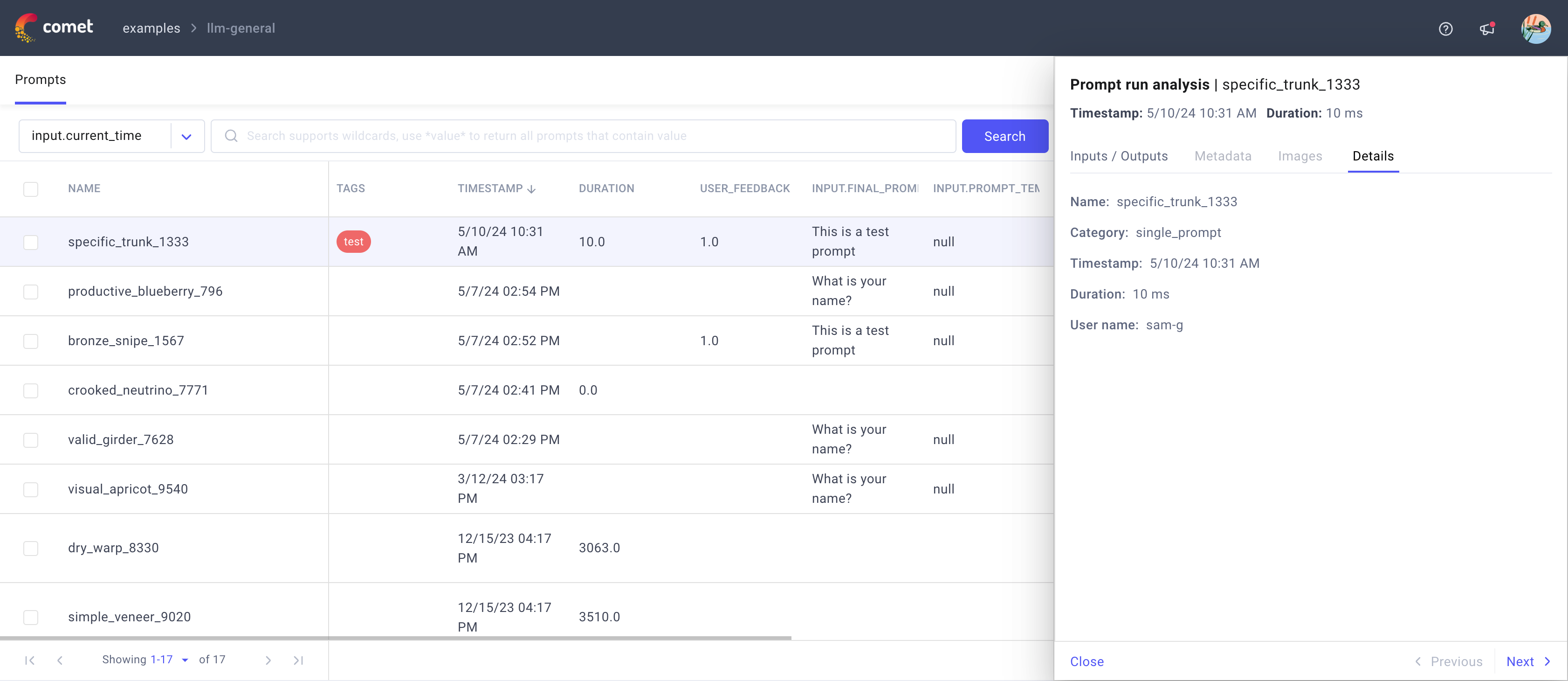

You can access logged I/O attributes and other details from the Comet UI in the Inputs / Outputs or Details sections of the Prompt sidebar, respectively.

End-to-end examples

Below you can find realistic end-to-end examples to inspire your LLM prompt engineering trials with CometLLM.

```python
import comet_llm
import time

# Initialize the Comet LLM SDK
comet_llm.init()

# Define the prompt from template
prompt_template = "Answer the question and if the question can't be answered, say \"I don't know\"\n\n---\n\nQuestion: %s\nAnswer:"
question = "What is your name?"

# Get the model output
# Note that duration and usage are likely to be returned by the LLM model call in practice
start_time = time.time()
model_output = "My name is Alex."  # Replace this with an LLM model call
duration = time.time() - start_time
usage = {
    "usage.prompt_tokens": 7,
    "usage.completion_tokens": 5,
    "usage.total_tokens": 12,
}

# Log the prompt to Comet with all metadata
comet_llm.log_prompt(
    prompt=prompt_template % question,
    prompt_template=prompt_template,
    prompt_template_variables={"question": question},
    metadata=usage,
    output=model_output,
    duration=duration,
)
```

```python
import comet_llm
import datetime
import time

# Initialize the Comet LLM SDK
comet_llm.init()


# Define support functions for the chain reasoning
def retrieve_context(user_question):
    if "name" in user_question:
        return "Alex"


def llm_call(user_question, current_time, context):
    prompt_template = """You are a helpful chatbot. You have access to the following context:
{context}
Analyze the following user question and decide if you can answer it, if the question can't be answered, say \"I don't know\":
{user_question}
"""
    prompt = prompt_template.format(user_question=user_question, context=context)

    with comet_llm.Span(
        category="llm-call",
        inputs={"prompt_template": prompt_template, "prompt": prompt},
    ) as llm_span:
        start_time = time.time()
        model_output = "My name is Alex."  # Replace this with an LLM model call
        duration = time.time() - start_time
        usage = {
            "usage.prompt_tokens": 7,
            "usage.completion_tokens": 5,
            "usage.total_tokens": 12,
        }
        llm_span.set_outputs(
            outputs={"result": model_output},
            metadata={"usage": usage, "current_time": current_time, "duration": duration},
        )

    return model_output


# Start the chain
user_question = "What is your name?"
current_time = str(datetime.datetime.now().time())
comet_llm.start_chain(inputs={"user_question": user_question, "current_time": current_time})

# Define the chain with two spans
with comet_llm.Span(
    category="context-retrieval",
    name="Retrieve Context",
    inputs={"user_question": user_question},
) as span:
    context = retrieve_context(user_question)
    span.set_outputs(outputs={"context": context})

with comet_llm.Span(
    category="llm-reasoning",
    inputs={
        "user_question": user_question,
        "current_time": current_time,
        "context": context,
    },
) as span:
    result = llm_call(user_question, current_time, context)
    span.set_outputs(outputs={"result": result})

# End the chain
comet_llm.end_chain(outputs={"result": result})
```

Tip

🚀 What next? Click on the Comet UI link printed by comet-llm when running the code snippets to access the examples on the Prompts page!